Last updated on 6 April 2025

Please note if you are using DSM7.2 or higher you should use the Container Manager version of this guide from the menu.

This guide has reached the end of its updates as most people are now on the latest DSM update - This guide is correct as of 08/12/2023 however no further updates will be added.

Important or Recent Updates

| Historic Updates | Date |
|---|---|
| New guide published | 28/07/2022 |
| AnalogJ has been busy notification settings updated | 05/08/2022 |
| Small tweak to the collector.yaml section and also the compose file to ensure NVME cache drives are shown correctly | 18/08/2022 |
| Added an extra section on how to secure the InfluxDB via environment variables | 19/01/2023 |
| Compose version number removed and small wording amendments | 09/04/2023 |
| Amended the path to save the compose file – this is for security, so the container has no access to the file contents. | 14/04/2023 |
| Amended SSH section to line up with the latest version of the guide in the 7.2 section | 16/10/2023 |

What is Scrutiny?

Scrutiny is a Hard Drive Health Dashboard & Monitoring solution, merging manufacturer provided S.M.A.R.T metrics with real-world failure rates.

Let’s Begin

In this guide I will take you through the steps to get Scrutiny up and running in Docker.

In order for you to successfully use this guide please set up your Docker Bridge Network first.

Scrutiny currently cannot be set up via the DSM Docker UI, so we will be using Docker Compose to get things up and running. This is not as complicated as it seems.

Getting our drive details

We need to get some details about our drives in order for Scrutiny to read their SMART data.

It’s time to log into your Diskstation via SSH. In this guide I am using Windows Terminal, however the steps will be similar on Mac and Linux.

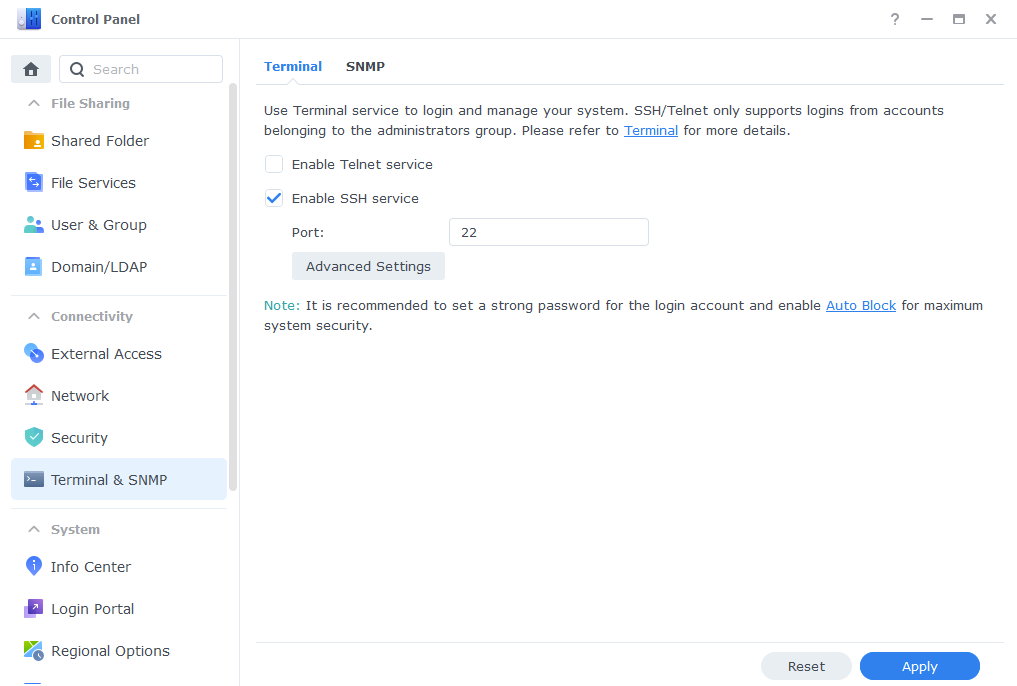

Head into the DSM Control Panel > Terminal & SNMP and then enable SSH service.

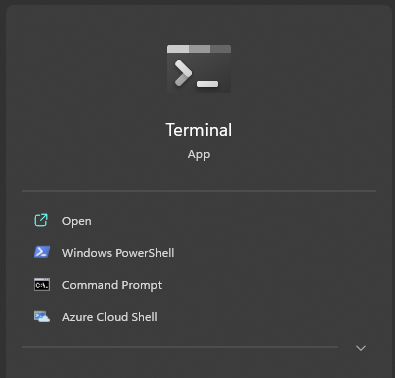

Open up ‘Terminal’

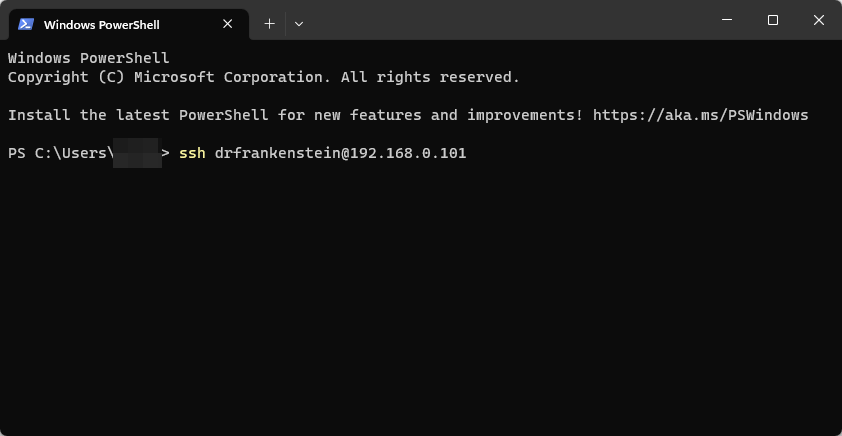

Now type ‘ssh’ then your main admin account username @ your NAS IP Address and hit Enter

ssh drfrankenstein@192.168.0.101

You will then be asked to enter the password for your main Synology user account. You can either type it or, if you are using a password manager, right-click in the window to paste it. You will not be able to see the password as you type or paste it. Then press Enter.

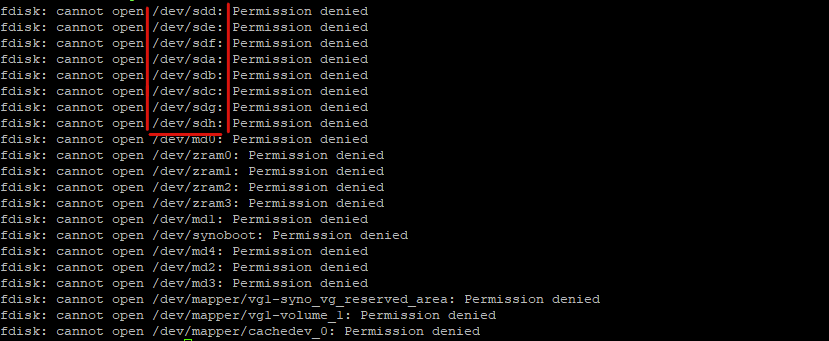

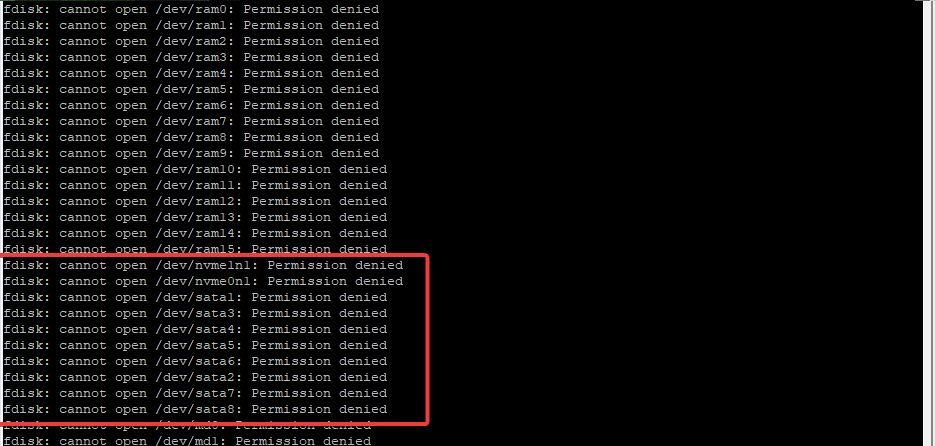

Now we are logged in, we just need to run a single command to see our drives. Note I am not prefacing this command with sudo as we don’t need the low-level detail. You will see permission denied errors, but these can be ignored.

fdisk -l

The output you will see depends on the model of NAS you own. The two examples below are from an 1821+ and an 1815+, which both have 8 bays. The 1821+ also has up to 2 NVMEs.

The 1815+ has 8 drives broken down from sda to sdh

The 1821+ has 8 drives broken down into SATA and NVME devices, sata1 to sata8 with the single nvme0n1.

Make note of the devices you see in your output as we will need them for the config file and compose.
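If the output is long, a small filter can pull out just the device names. This is a minimal sketch rather than one of the guide's required steps. The `list_disks` function name is made up for illustration, and it assumes each drive appears on a line starting with `Disk /dev/...:`, so compare it against what your own NAS prints.

```shell
# Hypothetical helper: read `fdisk -l` output on stdin and print only the
# whole-drive device paths (sataN, sdX or nvmeXnY), one per line.
# Assumes lines of the form "Disk /dev/sata1: 10.9 TiB, ..." (an assumption).
list_disks() {
  grep -E '^Disk /dev/(sata[0-9]+|sd[a-z]+|nvme[0-9]+n[0-9]+):' |
    sed -E 's/^Disk ([^:]+):.*/\1/'
}

# Usage on the NAS (the permission errors are hidden as they can be ignored):
#   fdisk -l 2>/dev/null | list_disks
```

Anything that is not a whole drive, such as md arrays or partitions, is filtered out, which leaves only the names needed for the config file and compose.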

Config Files and Folders

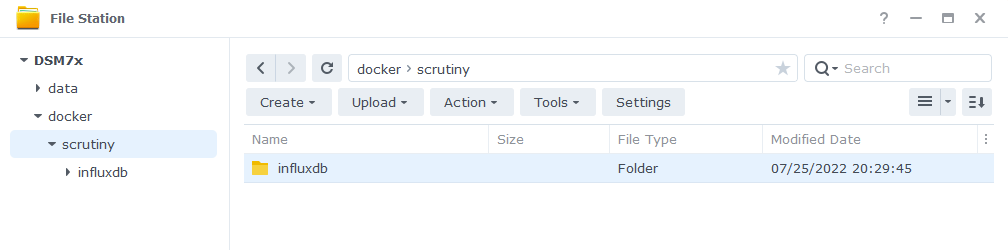

Next let’s create the folders the container will need. Head into FileStation and create a subfolder in the ‘docker’ share called ‘scrutiny’ and then within that another called ‘influxdb’. It should look like the below.

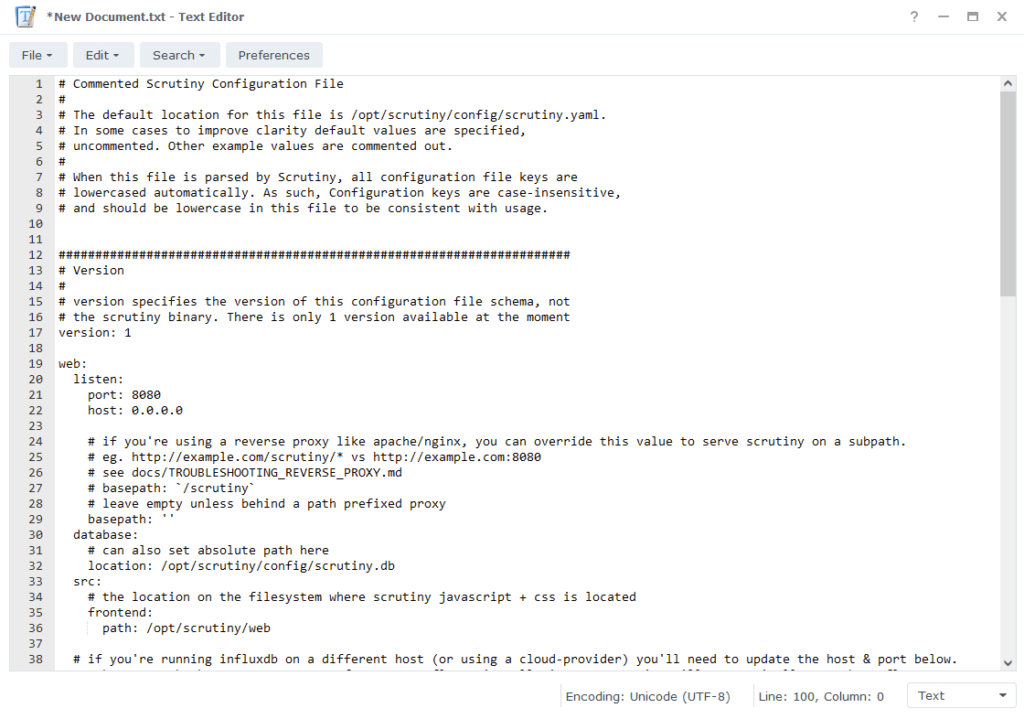

Next come the config files. You can edit these files in a number of ways, but to keep the guide OS-agnostic we will be using the Synology Text Editor package, which can be installed via Package Center.

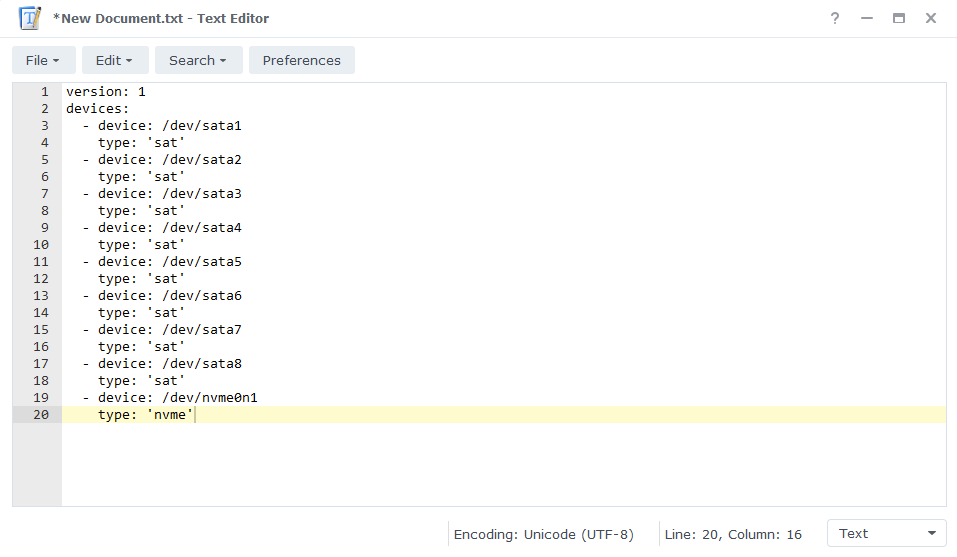

Open up a new text document and paste one of the two code snippets below into it. Use the one that matches up with the way your drives are shown in the previous step (if you come across anything different let me know in the comments!)

Type 1

version: 1
host:
  id: ""
devices:
  - device: /dev/sata1
    type: 'sat'
  - device: /dev/sata2
    type: 'sat'
  - device: /dev/sata3
    type: 'sat'
  - device: /dev/sata4
    type: 'sat'
  - device: /dev/sata5
    type: 'sat'
  - device: /dev/sata6
    type: 'sat'
  - device: /dev/sata7
    type: 'sat'
  - device: /dev/sata8
    type: 'sat'
  - device: /dev/nvme0n1
    type: 'nvme'
  - device: /dev/nvme1n1
    type: 'nvme'

Type 2

version: 1
host:
  id: ""
devices:
  - device: /dev/sda
    type: 'sat'
  - device: /dev/sdb
    type: 'sat'
  - device: /dev/sdc
    type: 'sat'
  - device: /dev/sdd
    type: 'sat'
  - device: /dev/sde
    type: 'sat'
  - device: /dev/sdf
    type: 'sat'
  - device: /dev/sdg
    type: 'sat'
  - device: /dev/sdh
    type: 'sat'

You will need to edit the config file in line with the number of drives you had in the output earlier either adding or removing lines accordingly, including adding or removing the NVME drives.

Next you can save this file as ‘collector.yaml’ in the ‘/docker/scrutiny’ folder.
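If you would rather not edit the list by hand, the file can also be generated from the device names you noted. This is only a sketch under a couple of assumptions. `make_collector_yaml` is a hypothetical helper name, and it guesses the type from the device path (`nvme` for paths starting with /dev/nvme, `sat` for everything else), mirroring the two snippets above.

```shell
# Hypothetical helper: print a collector.yaml for the device paths passed
# as arguments. Paths starting with /dev/nvme get type 'nvme', all other
# paths get type 'sat' (an assumption based on the Type 1 / Type 2 examples).
make_collector_yaml() {
  printf 'version: 1\nhost:\n  id: ""\ndevices:\n'
  for dev in "$@"; do
    case "$dev" in
      /dev/nvme*) kind=nvme ;;
      *)          kind=sat ;;
    esac
    printf "  - device: %s\n    type: '%s'\n" "$dev" "$kind"
  done
}

# Example for a two-bay unit with one NVMe drive:
#   make_collector_yaml /dev/sata1 /dev/sata2 /dev/nvme0n1 > /volume1/docker/scrutiny/collector.yaml
```

Read through the generated file before using it, as YAML is sensitive to indentation and the output should look like the snippet you would otherwise have pasted.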

Notifications Config (optional)

This step is optional and depends on whether you want to set up notifications in case one of your drives has issues.

As of writing there are 14 different notification methods. As you can imagine I cannot cover every single type in this guide, but this will get the config file in place for you to amend based on your preferences.

Open up a new file in Text Editor again. This time you need to copy and paste the full contents of the example config file located here

Scroll to the bottom of the file where you will see a number of config options for notifications. You will need to remove the # from the ‘notify’ and ‘urls’ lines, and then, depending on which type of notification you decide to set up, remove the # from the corresponding line.

The level of notification you receive (Critical or All Issues) can be set up in the WebUI once Scrutiny is up and running.

Finally, save this file as ‘scrutiny.yaml’ into the /docker/scrutiny folder.
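The same uncommenting can be done from the command line if you prefer. The sketch below is illustrative only. `enable_notify` is a hypothetical helper, and it assumes the notification section of the example file uses lines such as `#notify:`, `#  urls:` and `#    - "discord://..."`, so check the layout of the file you copied before relying on it.

```shell
# Hypothetical helper: read a scrutiny.yaml on stdin and remove the leading
# '#' from the 'notify:' line, the 'urls:' line and the url lines for one
# service, chosen by its scheme (e.g. discord). All other lines are left
# untouched. The commented layout it expects is an assumption.
enable_notify() {
  sed -E \
    -e 's/^#(notify:)/\1/' \
    -e 's/^#( +urls:)/\1/' \
    -e "s|^#( +- \"$1://)|\1|"
}

# Usage, where downloaded-example.yaml is a placeholder name for your copy
# of the example config:
#   enable_notify discord < downloaded-example.yaml > /volume1/docker/scrutiny/scrutiny.yaml
```

You still need to replace the placeholder values in the uncommented line with your own details for that service.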

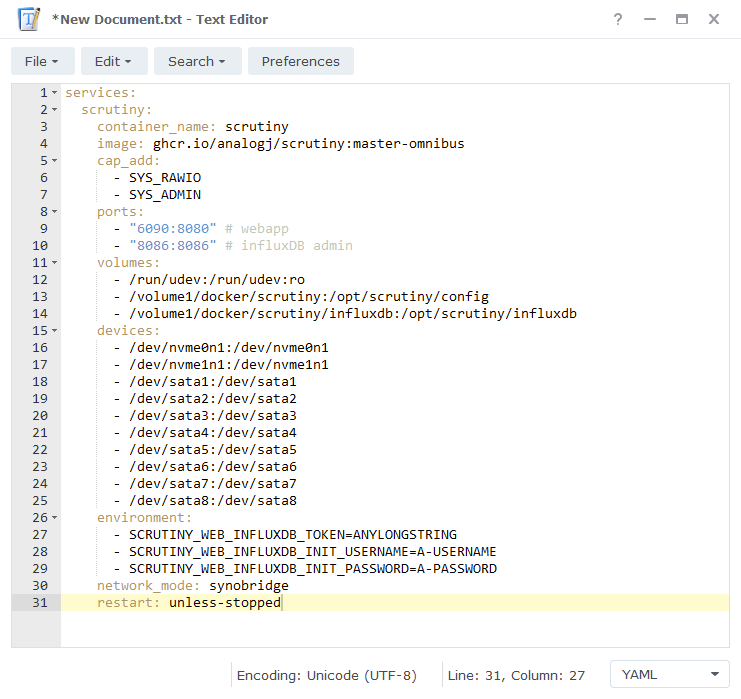

Docker Compose File

We will be using Docker Compose to set up the container. In a nutshell we will be creating a text file (YAML formatted) which tells Docker exactly how we want it set up.

Open up a new file in Text Editor again and copy the code below into it.

services:
  scrutiny:
    container_name: scrutiny
    image: ghcr.io/analogj/scrutiny:master-omnibus
    cap_add:
      - SYS_RAWIO
      - SYS_ADMIN
    ports:
      - "6090:8080" # webapp
      - "8086:8086" # influxDB admin
    volumes:
      - /run/udev:/run/udev:ro
      - /volume1/docker/scrutiny:/opt/scrutiny/config
      - /volume1/docker/scrutiny/influxdb:/opt/scrutiny/influxdb
    devices:
      - /dev/nvme0n1:/dev/nvme0n1
      - /dev/nvme1n1:/dev/nvme1n1
      - /dev/sata1:/dev/sata1
      - /dev/sata2:/dev/sata2
      - /dev/sata3:/dev/sata3
      - /dev/sata4:/dev/sata4
      - /dev/sata5:/dev/sata5
      - /dev/sata6:/dev/sata6
      - /dev/sata7:/dev/sata7
      - /dev/sata8:/dev/sata8
    environment:
      - SCRUTINY_WEB_INFLUXDB_TOKEN=ANYLONGSTRING
      - SCRUTINY_WEB_INFLUXDB_INIT_USERNAME=A-USERNAME
      - SCRUTINY_WEB_INFLUXDB_INIT_PASSWORD=A-PASSWORD
    network_mode: synobridge
    restart: unless-stopped

As you can see, the devices section contains all our drives. You will need to amend this again in line with the config file you created earlier. You will need to amend the paths each side of the : so they match, adding or removing drives accordingly, including the NVMEs.

e.g., /dev/sata1:/dev/sata1 or /dev/sda:/dev/sda and so on.
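The devices block follows a simple pattern, so it can be printed from a list of drives and pasted in place of the one in the compose file. This is a sketch only. `make_compose_devices` is a hypothetical helper, and the indentation it prints assumes the compose layout used in this guide.

```shell
# Hypothetical helper: print a compose 'devices:' block that maps each
# device path given as an argument to the same path inside the container.
# The 4 and 6 space indentation assumes the compose layout in this guide.
make_compose_devices() {
  printf '    devices:\n'
  for dev in "$@"; do
    printf '      - %s:%s\n' "$dev" "$dev"
  done
}

# Example:
#   make_compose_devices /dev/sda /dev/sdb
```

Mapping each path to itself keeps the names inside the container identical to the ones listed in collector.yaml.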

In addition to this, you will see three variables in the ‘environment’ section that will need to be updated as outlined below. These secure the database used by Scrutiny.

| Variable | Value |
|---|---|
| SCRUTINY_WEB_INFLUXDB_TOKEN | Enter a string of characters. You can use almost anything, but treat it like a password and make it a nice long string |
| SCRUTINY_WEB_INFLUXDB_INIT_USERNAME | This can be anything you like |
| SCRUTINY_WEB_INFLUXDB_INIT_PASSWORD | A secure password |

These 3 values are only required for the first ever setup – you can remove them once Scrutiny is up and running.
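If you are stuck for a long string to use as the token, one can be generated over SSH. This is just one possible approach, not something Scrutiny requires. `make_token` is a hypothetical helper name, and 32 random bytes is an arbitrary choice of length.

```shell
# Hypothetical helper: print 32 random bytes as a 64 character hex string,
# suitable for pasting in as SCRUTINY_WEB_INFLUXDB_TOKEN.
make_token() {
  head -c 32 /dev/urandom | od -An -tx1 | tr -d ' \n'
  echo
}

# Example:
#   make_token
```

A hex string avoids characters that could need quoting in the compose file.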

Once you have made the edits save this file as ‘scrutiny.yml’ in ‘/docker’
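Since the drives are listed in both collector.yaml and the compose file, it can be worth checking that the two lists agree before starting the container. The sketch below is an optional extra. `check_devices_match` is a hypothetical helper, and it assumes both files use the line layout shown in this guide.

```shell
# Hypothetical helper: compare the devices in collector.yaml ($1) with the
# /dev/... mappings in the compose file ($2). Order does not matter.
# Assumes "- device: /dev/x" lines in the first file and "- /dev/x:/dev/x"
# lines in the second, as in this guide.
check_devices_match() {
  collector=$(sed -n -E 's/^ *- device: *([^ ]+) *$/\1/p' "$1" | sort)
  compose=$(sed -n -E 's|^ *- (/dev/[^:]+):/dev/[^ ]+ *$|\1|p' "$2" | sort)
  if [ "$collector" = "$compose" ]; then
    echo "Device lists match"
  else
    echo "Device lists differ" >&2
    return 1
  fi
}

# Usage over SSH:
#   check_devices_match /volume1/docker/scrutiny/collector.yaml /volume1/docker/scrutiny.yml
```

The volume lines in the compose file are skipped because only entries starting with /dev/ are compared.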

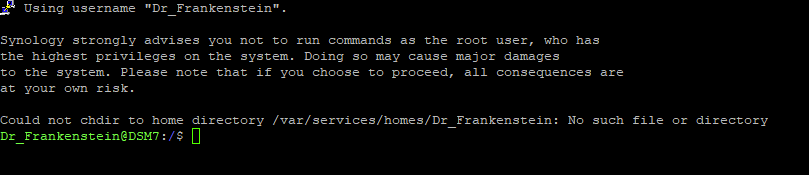

SSH and Docker-Compose

We are now on the final section. Log back into your NAS via SSH.

Once you have logged in you will need to run 2 commands. You can copy and paste these one at a time. You will need to enter your password for the command starting with ‘sudo’.

First we are going to change directory to where the scrutiny.yml is located, type the below and then press enter.

cd /volume1/docker

Then we are going to instruct Docker Compose to read the file we created and complete the set-up of the container. Again type or copy the below and press enter.

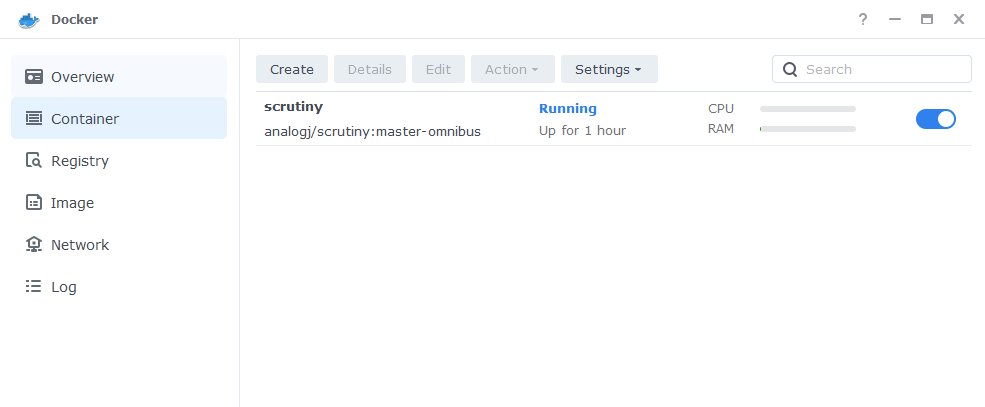

sudo docker-compose -f scrutiny.yml up -d

When the command has completed you should be able to see Scrutiny running in the list of containers in the Synology GUI.

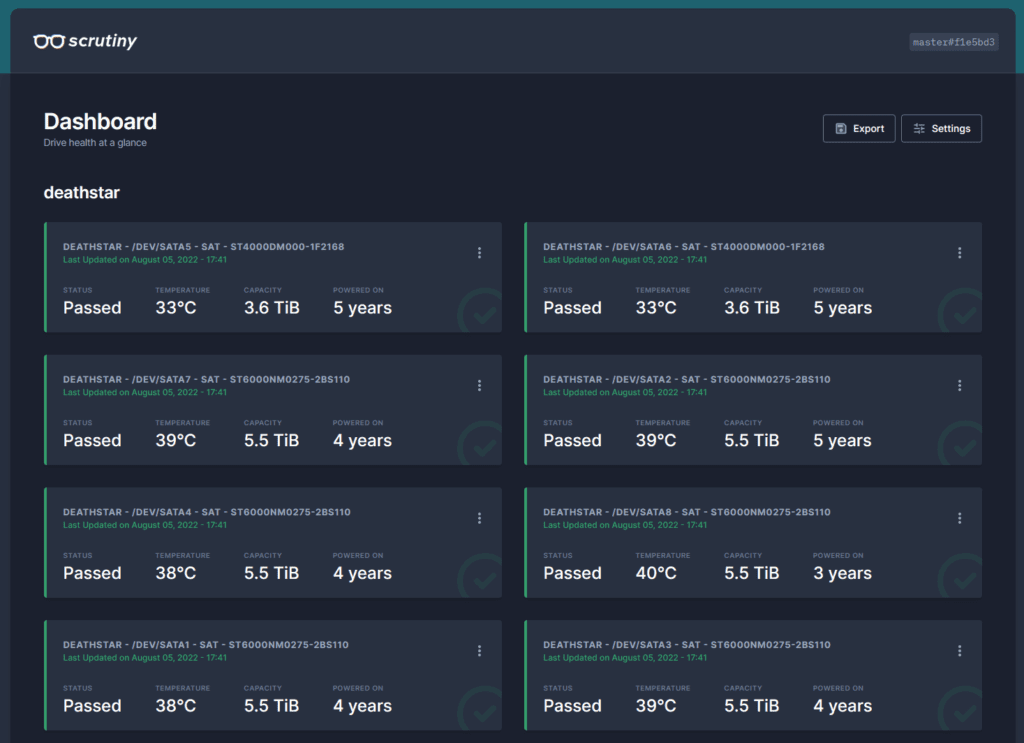
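The same check can be made from the SSH session if you still have it open. This is a small sketch, assuming the container name ‘scrutiny’ from the compose file. `is_running` is a hypothetical helper that only looks for an exact name in a list of container names.

```shell
# Hypothetical helper: succeed if the container name given as $1 appears
# as an exact line in the list of names read from stdin.
is_running() {
  grep -qxF -- "$1"
}

# Usage on the NAS:
#   sudo docker ps --format '{{.Names}}' | is_running scrutiny && echo "scrutiny is up"
```

If the container is not listed, `sudo docker logs scrutiny` will show its output and usually points to the cause.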

You should now be able to access the Scrutiny WebUI by going to your NAS IP followed by port 6090

e.g., 192.168.0.30:6090

Sometimes it can take a few minutes before all your drives appear, as Scrutiny needs to obtain their information so don’t panic if it’s initially empty. You can now adjust settings for the UI and Notifications in the WebUI.


Thanks for the tutorial. It worked, but I had to stop the container and set it to “high privileges” for scrutiny to display values. Otherwise the webpage was blank (I mean with an empty Dashboard section). Using a DS920+, DSM 7.2, deployed it using the Project feature of the Container manager package. Looks like the “cap_add” values SYS_ADMIN and SYS_RAWIO were not enough. Problem is, I don’t know how to add the “high privileges” mode directly in the compose file or, better, find another “cap_add” which would allow scrutiny to work without using high privileges mode. Any suggestion?

They should be sufficient to pull the data, remove the privilege as you don’t want any containers having that level of access to the system.

Sometimes it can take a little while for the metrics to appear on the first setup or if you swap a drive.

Never mind. I got it to work. 🙂

This is a great tool for those who need constant information from the system.

For me, the dashboard does not display any devices. Any ideas?

It works perfectly. Thanks

And how could a USB disk be added?

Good question 🙂 I will add this onto the guide as an optional step

Generally each USB drive will be mounted to /dev/usb1 and so on. This means you need to add two lines across the config.

In the collector.yaml add another drive

  - device: /dev/usb1
    type: 'sat'

In the compose yaml

      - /dev/usb1:/dev/usb1

After a minute or two the drive should appear. The only caveat is that the USB caddy has to support passing SMART data, which some do not.

Working perfect. Thanks.

Hello

Thank you for this guide.

I had to add extra steps in order to make it work on my DS1821+:

1. Run docker compose, then log into the InfluxDB instance. For the user created by the environment variables, I had to create the ‘scrutiny’ organisation (there was an error in the container logs).

2. The token is created by the InfluxDB instance, so I had to copy it from the InfluxDB instance, paste it into the docker compose file and rebuild the stack.

Hey, I will do a fresh installation to see if I can repeat this issue. Did you add the three environment variables on the first launch? That would allow you to create your own token without the need to go into the database at all.

Sorry for posting late. I came back to your blog to check if you had a part on the notification function (and especially the script one).

To answer: I followed your guide line by line, so before the first start of the container I had set a string in the SCRUTINY_WEB_INFLUXDB_TOKEN env variable.