Important or Recent Updates

| Historic Updates | Date |
|---|---|
| First version of the setup | 01/08/2021 |
| Updated the general formatting. Gave the guide a once-over with LanguageTool | 14/03/2022 |
| Added ‘books’ | 15/07/2022 |
| DSM7.2 Update for Container Manager | 22/04/2023 |
| Adjusted screenshot for DSM7.2.1 | 30/04/2024 |
| Amended the first section of this guide to accommodate those using NVMe volumes. | 31/10/2024 |

Container Manager Install

When installing Container Manager for the first time it will automatically create your docker shared folder, so there is no need to create this manually. If you have an NVMe or SATA SSD volume make sure you install the package to it for optimal performance.

Docker path adjustments for NVMe or SATA SSD volumes

If you are using an NVMe or SATA SSD volume, you will need to change the storage volume shown in each guide for any /docker share to the volume number of your fast storage. The /data share remains on your larger hard drive volume.

For example, for Plex it would look like this, assuming your volume numbers are volume 1 (hard drives) and volume 2 (NVMe/SSD):

    volumes:
      - /volume2/docker/plex:/config
      - /volume1/data/media:/data/media

Media Folder Set up

When passing folders into a Docker container each mount point is treated as its own filesystem.

This means that when moving files between two mount points, Docker will do a copy then delete operation instead of an instant move. You end up creating a full duplicate of a file while it’s being ‘moved’, which causes unwanted disk IO and temporarily takes up double the space (especially when seeding torrents after copying to the final folders).

In order to avoid this we need to set up a clean directory structure that allows us to just have one folder or share mounted to our containers.
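As a rough sketch of the difference, the compose fragment below contrasts a single /data mount with the split mounts that cause the copy then delete behaviour. The service name, image and exact paths are assumptions for illustration only and are not taken from a specific guide.

```yaml
# Illustrative sketch only: service, image and paths are assumed.
services:
  sonarr:
    image: lscr.io/linuxserver/sonarr:latest
    volumes:
      - /volume1/docker/sonarr:/config
      # One mount covering downloads and media. A move from
      # /data/torrents/completed to /data/media/tv stays inside
      # a single mount point, so it does not need a copy.
      - /volume1/data:/data
      # Pattern to avoid: two separate mount points mean a move
      # between them becomes a copy followed by a delete.
      # - /volume1/data/torrents:/downloads
      # - /volume1/data/media/tv:/tv
```

With the single mount, the paths you set inside the app all start with /data, matching the folder structure created in the rest of this guide.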

If you are starting with these guides and have existing media, you may need to move your data around in line with this setup. I recommend creating all the folders below using File Station to avoid potential permission issues later.

Directory Structure

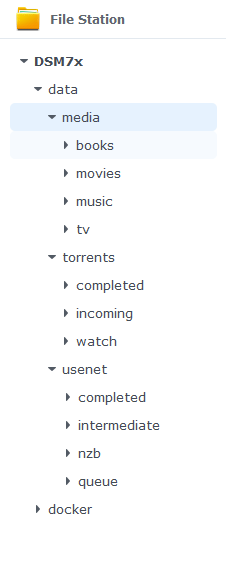

With this setup all of your media will sit within a single share. You can call this whatever you want, but in all the guides it will be called ‘data’. If you change its name you will need to adjust any steps or compose files from my guides accordingly.

Please note: it is important that you decide whether to use lowercase or uppercase folder names. I recommend sticking with lowercase everywhere as it simplifies setup. If you decide to go against this, ensure you change all the volume maps in the guides to match the case! Otherwise, both you and your containers will be very confused.

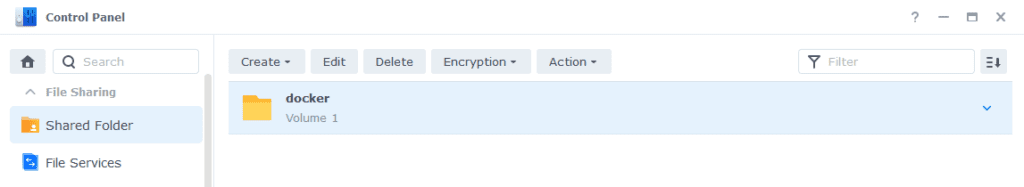

Open up the control panel then in ‘Shared Folder’ hit ‘Create’

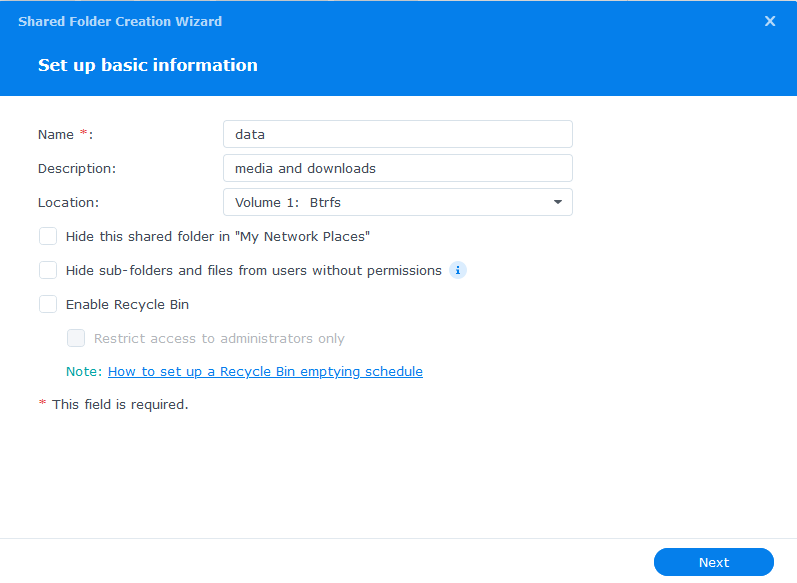

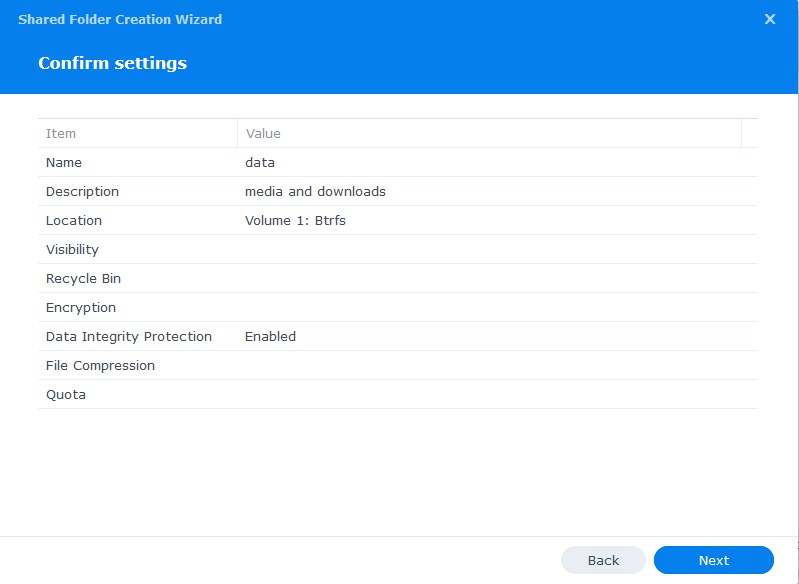

Screen 1 – fill out the name as ‘data’ and use Volume 1 for its storage location (Your main hard drive volume)

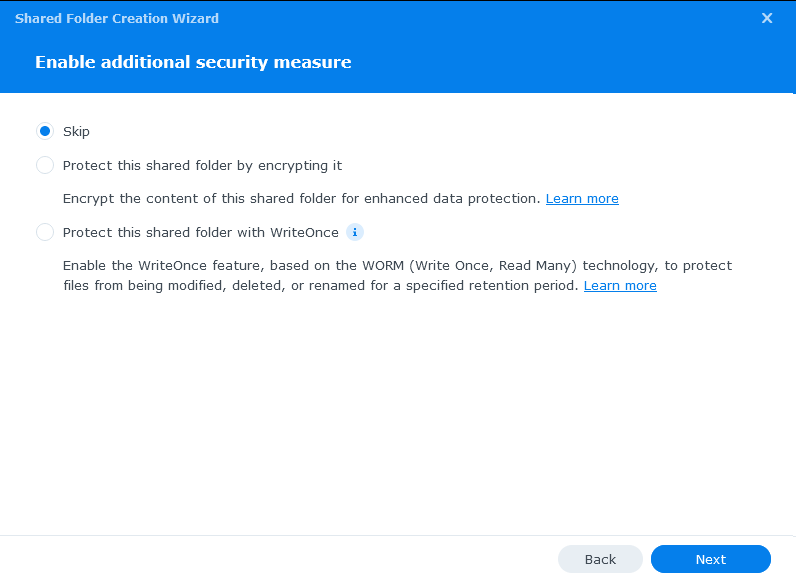

Screen 2 – we leave the folder unencrypted and don’t enable write once features

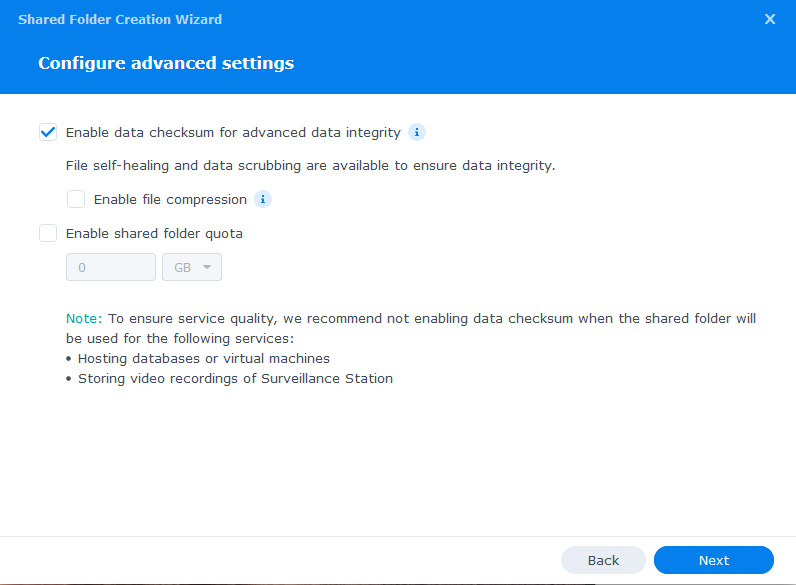

Screen 3 – Enable data checksums as this will help catch any issues with files during data scrubbing. (These options will not appear if you have an ext4 file system. If you are starting this with a clean NAS, go back and change to Btrfs if possible.)

Screen 4 – just click next past the summary

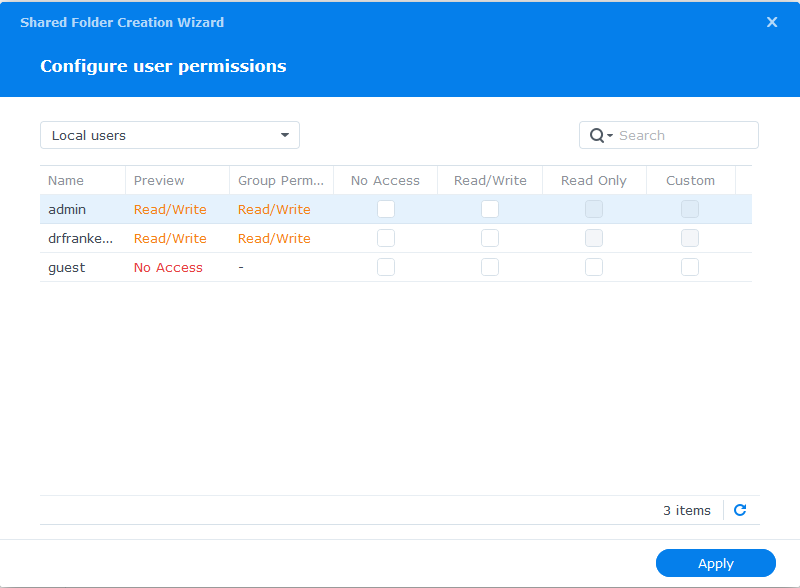

On the final screen we don’t need to change any user permissions yet; we will do that in the second guide.

The Sub-folders

Now we have our main ‘data’ directory we need to create a number of sub-folders to hold our media and downloaded files. You can amend this depending on whether you are using Torrents or Usenet (Newsgroups) or both.

You will initially create 3 folders within /data

- media

- torrents

- usenet

Then under /data/media create

- movies

- music

- tv

- books

Then under /data/torrents create

- completed

- incoming

- watch

Then under /data/usenet create

- completed

- intermediate

- nzb

- queue

Your folder tree should now look like this.

Finally, within the /docker shared folder create a folder called ‘projects’ which will be used for any containers using the Projects (Docker Compose) feature of Container Manager.

You can now go back to where you left off in the guides and do the next step.

Looking for some help? Join our Discord community

If you are struggling with any steps in the guides or looking to branch out into other containers join our Discord community!


Something is not clear.

You are suggesting NVMe disks for a performance boost and warning against using them as cache drives, yet you are creating this directory structure on the HDDs.

So what will we use the NVMe SSDs for?

Can we separate this shared “data” directory and place “docker” on the SSD and “media” on the HDD? Is that possible?

Could you also add an “Emby” guide in addition to Plex and Jellyfin? I have tried all of them and Emby was the only one that could direct play 10-bit x264 videos without the need for transcoding. Its client support is better than Jellyfin’s and its UI is much better.

Hi John

This is a very recent addition to the recommendations, as of the 13th, as I am seeing more and more people make this jump. I will review the wording in this guide and the screenshots to make it clearer. The main part is the Container Manager package installation, as that defines where the docker share ends up.

1) Set up the volume on the NVMe drives (SSDs), using the scripts mentioned if they are unsupported.

2) Install Container Manager on the second volume (/volume2).

3) This will automatically create the /docker folder on this volume, and data remains on the HDDs (/volume1).

From there, in the compose/YAML for each guide you amend anything for /volume1/docker to /volume2/docker; the rest remains the same.

Let me see what I can do on the Emby side of things. I tried it out many years ago, so I will spin it up as it should be really similar guide-wise. I will have a look over the weekend 🙂

Does it make sense to make a separate Audiobook folder (if I have a lot of them) in /data/media/?

Or should I put the audiobooks in the books folder?

I would split them personally – I don’t have many but keep them separate from my books

noob following your guide and wanted to point out I was frantically reading and scrolling back through pages when I got to the image showing a ‘docker’ folder – as I didn’t have one! Please maybe highlight or asterisk the step about installing Container Manager first (I missed it when I first skimmed through the text) and maybe mention it should automatically create the ‘docker’ shared folder. Thank you.

Hey – Thanks for the suggestion, it’s quite timely as I am just reviewing/reworking the page about which package is for which DSM version and the memory recommendations. It’s likely I will do something like this, so someone new follows step 0 once and then skips it for all other guides.

Step 0: Container Manager Install and Performance Recommendations (SSD and Memory)

Step 1: Directories….

Hi,

I’m new to Synology and really like this setup. Coming from Unraid, my initial plan was to use 2x SSDs as a volume for the downloads to speed up unpacking, and then move the files to the HDDs on a different volume. This is not possible when following this path, or is it?

On Unraid you can easily manage that, since a shared folder can span an array and a cache pool. Is there an equivalent here?

Hey Luke – I do something similar here using an old SSD on esata for the temp location for my Usenet downloads.

You would need to set up a separate volume for the NVMe (SSD) pool. If they are not official sticks, use this script to add them to the hard drive database and the UI will let you create the separate volume:

https://github.com/007revad/Synology_HDD_db

After that you can just set up a shared folder on that volume, for example /volume2/unpack (name the shared folder however you wish).

Then add this as an extra mount point for the containers you want to use it

– /volume2/unpack:/unpack and set the folder in sabnzbd for example.
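As a rough sketch, this is how that extra mount might sit in a compose file. The service name, image and the two /volume1 paths are assumptions for illustration, so keep whatever the guide for your downloader already uses and only add the last line.

```yaml
# Illustrative sketch only: image and /volume1 paths are assumed.
services:
  sabnzbd:
    image: lscr.io/linuxserver/sabnzbd:latest
    volumes:
      - /volume1/docker/sabnzbd:/config
      - /volume1/data/usenet:/data/usenet
      # Extra mount point: shared folder on the SSD volume,
      # visible inside the container as /unpack.
      - /volume2/unpack:/unpack
```

Inside the app you would then point whichever folder you want on the SSD at /unpack.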

Hope that helps

Can I just use 2 cache SSDs to store the Usenet temp downloads instead of creating a pool via the script?

You don’t really have any control over what hits the write cache (assuming you have read/write cache enabled). You would be better off getting rid of the cache as it’s largely a waste of time for home use. Just set up actual SSD volumes, which give you complete control over what goes to SSD. Keep in mind that writing a ton of Usenet data will wear the SSD out quicker. I personally have a crappy old SATA SSD on my eSATA port purely for Usenet unpacking (you can stick something on USB as well!).

Thank you so much for all the info you provided. I just installed 2 x 2TB NVMe SSDs, ran the script, and created a new volume for them. I mainly wanted to use it for downloading and unpacking, then maybe later on moving my media to the HDD (vol1) for Plex usage. I used RAID0 since these files aren’t very important to me and I would rather have max storage instead.

I’m a bit confused though. Your guide is mainly showing that only the docker container would be on the SSD. Is there any benefit of that really? Shouldn’t the downloads folder be on the SSD for processing, then moved to HDD?

There is a massive benefit from the containers being on the SSD. All the random reads and writes for the containers themselves, and then each app within them, will result in much faster load times. On the secondary point, you can definitely move either all downloads or at least the random writes to SSD. The SSD I use for my temp folder in SABnzbd takes the random write hit from the thousands of posts that are pulled. I then unpack directly to my hard drive volume, as my drives can easily take the large contiguous write. (You could do this for torrents as well, but you have to consider seeding and using up the space.)

Two ways you can go about this

1) Create a new shared folder called ‘downloads’ (or whatever you want) on the new volume then add this as an additional mount point to the container

– /volume2/downloads:/downloads

Then set your downloader of choice to use this folder for its incomplete (and even completed) files. The Arrs can then perform a move into your final /data folder.

2) If you just want all the temp (random writes) going to SSD and then unpack into /data, no additional mounts are required. Just create a folder in /docker/sabnzbd/incomplete and then within Sab set the temp folder to /config/incomplete (this is where it’s mounted).
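A minimal sketch of why option 2 needs no extra mount, assuming the docker share is on /volume2 and data is on /volume1 as discussed above. The image name and the usenet path are assumptions for illustration.

```yaml
# Illustrative sketch only: image and the usenet path are assumed.
services:
  sabnzbd:
    image: lscr.io/linuxserver/sabnzbd:latest
    volumes:
      # The docker share is on the SSD volume, so /config/incomplete
      # inside the container is /volume2/docker/sabnzbd/incomplete
      # on the NAS. Temp (random) writes land on the SSD.
      - /volume2/docker/sabnzbd:/config
      # Completed downloads still unpack to the hard drive volume.
      - /volume1/data/usenet:/data/usenet
```

The only change is the temp folder setting inside the app; the mounts stay as they are in the guide.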

Lots of ways to skin a cat, so you could do this other ways as well.

Hi there. I’m only just beginning to follow your guides but so far (this one lol) it is straightforward and easy to understand. I have been trying to understand Docker for about 3 years (on and off) and still find it frustrating, mostly because the guides I try to follow always become vague over certain steps and I just can’t figure out what to do in that step. HOPEFULLY it won’t be the case with your guides.

I just wanted to say THANK YOU for taking the time to produce these guides!

Hey, no worries – there is certainly a learning curve to this – take your time and don’t skip any reading of the steps and you should be good. Always check the FAQs if something doesn’t initially go as expected. Happy to help if you get stuck, or jump on Discord as we have a ton of other helpful people at this point if I am not around!